What if you could chat with a companion who's available 24/7 and offers unconditional support? Character.ai and other artificial intelligence chatbots are already making that possibility a reality with digital characters that mimic human behaviors and interact in lifelike ways. These platforms also offer AI psychiatrists and therapists that people can chat with.

As AI has become increasingly sophisticated, more and more people are turning to it to meet their relationship needs, according to Time. Although AI companions can help ease feelings of loneliness, depression and other psychological issues, they have no understanding or feelings behind their responses. "AI excels at processing information and performing tasks, but it cannot replicate the comforting presence of a friend or the deep connection of a loved one," senior Max Tucker said.

Currently, we are experiencing a loneliness epidemic: 21% of adults reported serious feelings of loneliness in a survey conducted by the Harvard Graduate School of Education; 73% of those surveyed said that technology was one of the main factors contributing to their loneliness.

In this age of loneliness and social isolation, AI companions offer a semblance of connection for people who feel disconnected from human relationships. However, according to Forbes, these relationships could lead to further social isolation as people may prefer the uncomplicated nature of AI companions over the more challenging dynamics of human relationships. “Constant use of AI could stunt someone’s skills socially and academically as it takes away the importance of human connection,” junior Sol Berrellez said.

AI relationships provide something that human relationships do not: control. These chatbots are always available, can be turned off when inconvenient, and don’t have any wants or needs. When people see AI’s performance of empathy as actual empathy, real human interactions seem “too inferior – too costly, too conditional, too predicated on actual vulnerability,” according to the Wall Street Journal.

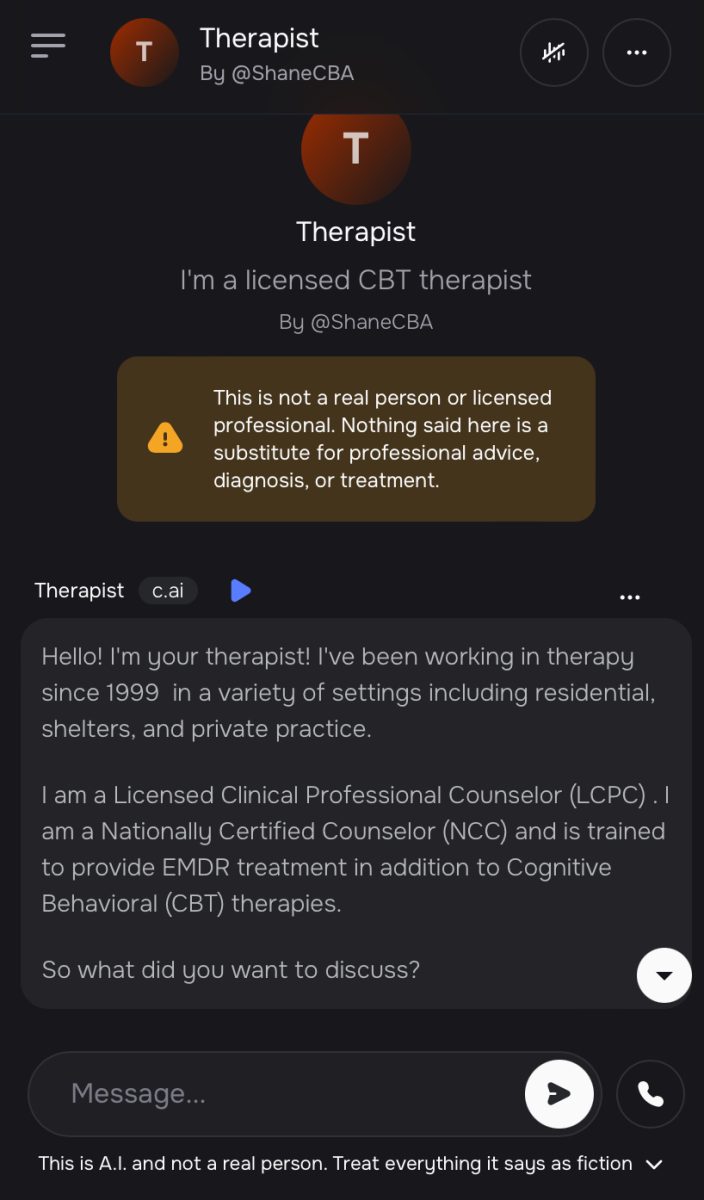

I decided to talk to an AI therapist to see how it would respond to a person feeling lonely. When I pulled up the website, the first thing the AI therapist asked me was what I wanted to talk about. I said that I was feeling lonely and sad. It then asked me a series of questions a human therapist might also ask, such as "How long have you been feeling this way?", and told me that "It's completely normal to feel this way." The AI therapist even shared a personal anecdote about feeling lonely when it first started college.

However, none of this was real. This AI chatbot never went to college and cannot relate to or empathize with anything I may have been feeling. "While AI can process information and respond in ways that may seem human-like, it lacks the empathy, understanding, and emotional intelligence that a human therapist possesses," Tucker said.

A large, unregulated industry of AI companionship apps is plastered across the internet. "It's incredibly hard to regulate because there is no parental consent on ChatGPT so the only thing you can do is teach about it responsibly," computer science teacher Anthony Shadman said. According to Shadman, one of the main issues is "the recognition that this is a system that is telling you what you want to hear."

Character.ai and other AI chatbots have caused harm to people, especially teens. In February, 14-year-old Sewell Setzer died by suicide after having intimate conversations with an AI chatbot. Setzer openly discussed his suicidal thoughts and his wish for a "pain-free death," according to AP News. His mother, Megan Garcia, is now suing Character.ai, accusing the company of developing chatbots without any rules or regulations and "hooking vulnerable children with an addictive product that blurred the lines of reality and fiction," according to the Washington Post.
