The rapid growth in the use and capability of artificial intelligence (AI) in recent years has left people questioning how it will alter the way we live and work. While it affects every aspect of life, journalism is particularly impacted by this new form of intelligence.

AI is software that replicates human behavior and thinking, which allows it to be trained to solve problems. Today, AI comes in many forms. GFP-GAN is a free AI tool that restores old photos within seconds. Notion.ai is a copywriting tool that uses AI to generate high-quality, plagiarism-free content. The most widely known is ChatGPT, which allows users to type questions and receive customized responses. Because AI is a relatively new tool, it is only getting smarter, and the range of things it can do continues to grow.

The rising use of technology had already affected news organizations long before AI became popular. According to the US Bureau of Labor Statistics, newspaper employment has decreased by over 60% since 1990. In more recent years, BuzzFeed decided to use AI-generated quizzes, and the German tabloid Bild laid off a third of its staff, replacing them with machines. However, the most prominent and depended-upon news sources have yet to replace human-generated content with any sort of technology.

While AI is skilled at collecting, organizing and analyzing data, whether it can sufficiently replace human writers is still up for debate. A central argument in the Writers Guild of America (WGA) strike was the need for human writers, as opposed to AI-generated scripts. This argument should extend to journalism, as AI cannot produce articles or scripts on the same level as a human journalist. While AI will continue to evolve, it will always lack the human qualities that are central to being a compelling storyteller.

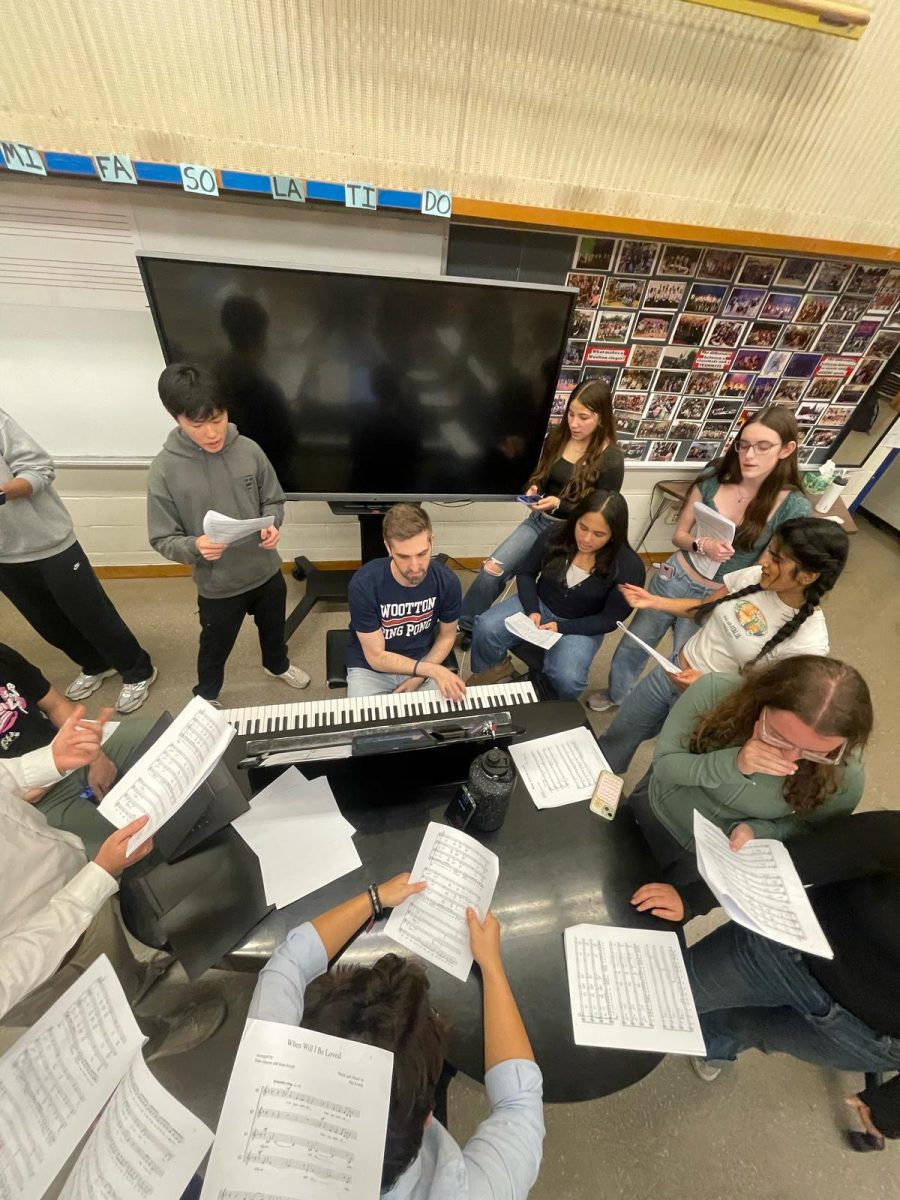

AI may not be able to replace the writers in a newsroom or the anchors on a news broadcast, but it can be used to transform and enhance journalism. As editor-in-chief of Common Sense, I’ve already used the AI built into Adobe Photoshop to design and produce our news magazines. As AI seeps further into our daily lives, it is important that journalists learn to work with it, rather than against it.

Like businesses, journalism will benefit from AI’s ability to analyze large amounts of data faster than any human. AI data mining systems can be used to alert reporters to potential news stories and identify statistical patterns. AI is also being used in media organizations to transcribe interviews, subtitle videos and analyze audience interests.

Journalism will never exist without humans at the center of the work. Even in a future of prominent technology and AI, humans will still be vital. Humans will still need to be on the ground, investigating and pursuing information for important stories. Humans will still need to have connecting conversations with individuals affected by the stories they plan on telling. Humans will still be needed to convey important information on daily broadcast shows. Humans will still need to share passionate opinions, because technology will never be able to mimic passion. Humans will still be needed to combine data, statistics, and quotes into powerful articles that share untold stories. In the future of journalism, I’m confident that while artificial intelligence may advance, humans will still be needed.

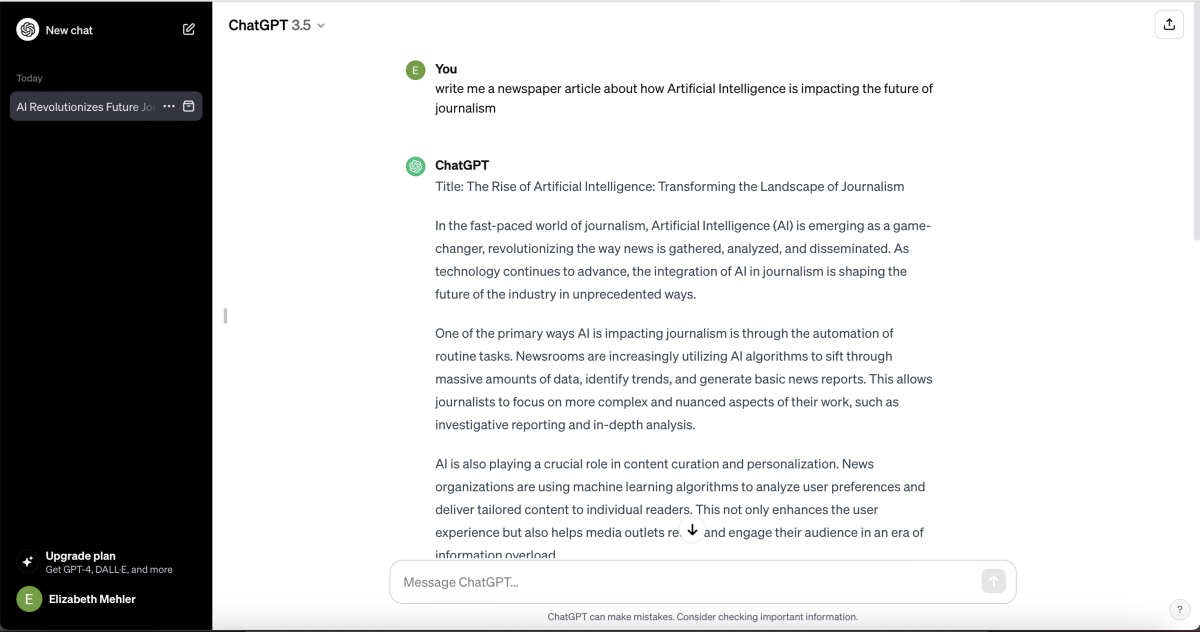

To test this theory, I prompted ChatGPT to write an article about the rise in antisemitism in MCPS, the same article topic written by commons editor Claire Lenkin. ChatGPT began the article with the sentence, “In a concerning trend, Montgomery County Public Schools (MCPS) in Maryland are grappling with a significant rise in incidents of antisemitism, involving both staff and students.” Lenkin, by contrast, began with, “3,823 – that is the number of antisemitic incidents that were recorded in the US between Oct. 7 and Jan. 7, according to the Anti-Defamation League.” Lenkin opened with a compelling and timely statistic that draws the reader in, while ChatGPT just restated the prompt I entered into it.

ChatGPT goes on to write, “Reports indicate that various incidents of antisemitic behavior have been witnessed across multiple schools within the county. These incidents range from offensive remarks and derogatory comments to more severe cases of harassment and discrimination.” Lenkin, however, wrote, “On Nov. 13, local news outlets started covering a story about a teacher at Tilden Middle School who had allegedly posted antisemitic content to her social media.” As the articles continue, ChatGPT does not once name a specific incident, school, or person. ChatGPT even makes up quotes from a superintendent and rabbi, prompting the user to insert a name. Lenkin’s article includes a series of specific incidents throughout MCPS, with quotes from our school’s restorative justice coach and the MCPS teachers’ union.

Lenkin’s article is clearly superior, as it is well researched, well written and specific to the community it represents. ChatGPT’s article, on the other hand, vaguely discusses a topic without providing a single fact or new piece of information. But what is missing most from the ChatGPT article is the human quality of the writing, which is what captures readers’ attention. While AI will continue to advance, human-written journalism will remain more impactful.